Introduction

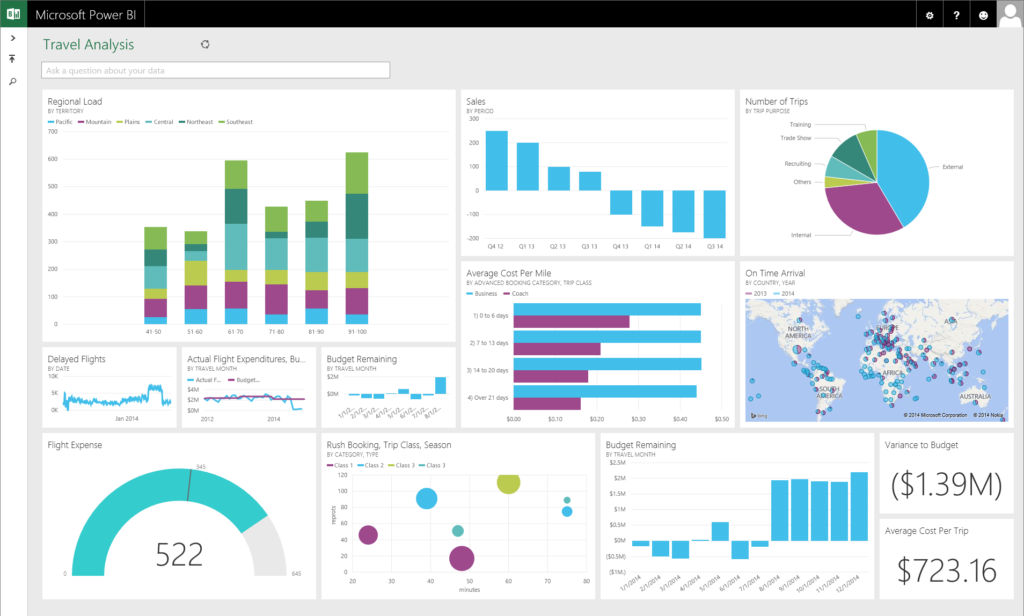

I developed the IMDb Top 250 Movies Scraper using Python to automate the extraction of detailed information about the top 250 movies listed on IMDb. By leveraging Selenium for web automation and pyodbc for database interactions, this tool efficiently gathers comprehensive data and stores it in a SQL Server database. The primary goal is to visualize this data in Power BI, creating insightful reports such as “Movies Rating through Rating-Count,” “Director Rating Count out of IMDb Rating in Top 250 Movies,” “Stars Rating in Top 250 Movies through IMDb Rating,” and various “Top Box Office” dashboards.

Project Overview

In this project, I’ll guide you through the entire process of extracting movie data from IMDb, storing it in an SQL database, and visualizing it using Python’s powerful libraries. Whether you’re a data enthusiast, a developer, or a researcher, this comprehensive guide will equip you with the skills to automate data collection, manage it efficiently, and create meaningful visual representations.

Project Breakdown:

- Automating Data Extraction with Selenium:Leveraging Selenium to scrape detailed information from IMDb’s Top 250 Movies.

- Efficient Data Management with SQL Server: Storing the scraped data in a structured SQL database for easy access and manipulation.

- Visualizing Data with Power BI:In this final phase, we will harness the power of Power BI to transform our analysis into engaging visual representations. After collecting and storing the data, we will utilize Power BI’s robust features to design interactive dashboards. By creating various charts including column, donut, stacked area, and decomposition tree, we can effortlessly explore and interact with our insights.

By the conclusion of this project, you will have learned how to automate data gathering, manage it effectively, and create visuals that breathe life into your data. Let’s dive in!

Why This Project Matters

The primary use case for this scraper is to scrap the IMDb’s Top 250 movies. Specifically, I aimed to extract the following details for each movie:

- Title

- Year

- Runtime

- Movie Time

- Rating Count

- IMDB_Rating

- Description

- Stars

- Directors

- ImageURL

Once extracted, this data is stored in a SQL Server database, which serves as the data source for Power BI. The visualization objectives include:

- Movies Rating through Rating-Count Report:Analyze the distribution of IMDb ratings against the number of ratings each movie has received.

- Director Rating Count out of IMDb Rating in Top 250 Movies:Assess the performance of directors based on the IMDb ratings of their movies within the Top 250 list.

- Stars Rating in Top 250 Movies through IMDb Rating:Evaluate the performance of actors and actresses by analyzing their movies’ IMDb ratings.

- Top Box Office Dashboards:

- Top Box Office Weekly Gross Out of Total Gross in Total Number of WeeksReport: Track weekly gross earnings of top movies against their total gross over multiple weeks.

- Maximum Rating Count out of IMDb Rating in Box Office:Identify movies with the highest number of ratings in relation to their IMDb ratings within box office performances.

- Stars Rating Count out of Total IMDb Rating in Box Office:Analyze how actors’ or actresses’ movies perform in terms of IMDb ratings within the box office segment.

- Directors Rating Count out of Total IMDb Rating in Box Office:Assess directors’ performance based on the IMDb ratings of their box office movies.

Tools and Technologies for my Project

In this project, several key tools and technologies were utilized to achieve efficient data scraping, storage, and visualization.

Selenium:

It was employed to automate browser interactions for scraping data from websites like IMDb. It enabled seamless navigation through web pages, extraction of data using specific selectors, and interaction with various web elements, significantly reducing the manual effort required to gather comprehensive movie details.

WebDriver Manager:

It was integrated to manage the browser driver automatically. It streamlined the process by downloading and configuring the correct version of ChromeDriver, which eliminated the need for manual handling and ensured compatibility with the installed version of Google Chrome. This not only saved time but also minimized potential errors related to driver mismatches.

Pyodbc:

To connect the project to an SQL database, pyodbc was utilized. This library facilitated the storage and retrieval of scraped movie data in a structured format within the database. By providing a reliable interface to interact with SQL Server, pyodbc ensured that data could be efficiently managed, queried, and maintained for future analysis.

Pandas(pd):

Lastly, Pandas (pd) played a crucial role in manipulating and analyzing the scraped data. With its powerful data structures and functions, Pandas allowed for the cleaning, transformation, and preparation of data for visualization and storage in the SQL database. This ensured that the data was in an optimal state for generating insightful visualizations and performing detailed analyses.

Section 1: Extracting Data from IMDb with Selenium

Understanding Selenium:

Selenium employs the WebDriver protocol to automate processes on widely-used browsers like Firefox, Chrome, and Safari. This automation can be executed locally for tasks like testing web pages or remotely for purposes such as web scraping.

Preparing for the Setup

Here’s a step-by-step guide on how to use Selenium, with the example of extracting data from IMDb.

Step 1 — Install and Imports

First, install the required Python packages:

pip install selenium webdriver-manager pyodbc

Once the installation is complete, proceed with the imports:

import time

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from webdriver_manager.chrome import ChromeDriverManager

import pyodbc

Step 2: Install and Access WebDriver

To automate browser actions, a WebDriver is essential. It opens the browser and interacts with websites. For Chrome users, you will need the ChromeDriver, which can be downloaded based on your browser version. To check your Chrome version, follow these steps:

- Click the three dots in the top-right corner of the browser.

- Navigate to Help > About Google Chrome.

This will display your version.

You must know where the WebDriver is stored on your computer, typically in the Downloads folder. Create a driver variable pointing to the file path where the WebDriver is saved:

driver = webdriver.Chrome('/Users/MyUsername/Downloads/chromedriver')

Note: If you haven’t installed ChromeDriver, you can do it automatically in your script using

service = Service(ChromeDriverManager().install())

This command handles the download and installation of the appropriate version of ChromeDriver for your browser automatically. It streamlines the process, saving time and making sure you always have the correct version, so you don’t have to worry about updating it manually.

Note: When scraping websites like IMDb, they often block automated bots from accessing their content. To get around this, we can make our script look like a regular browser by including a User-Agent header. This header imitates the behavior of a real user browsing the site. For instance, the following line:

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36'

}

Step 3 — Navigating to IMDb with Python

This step is straightforward but crucial in the web scraping process. To start scraping, you need your Python code to open the IMDb website (or any other site you want to scrape). With Selenium, the browser will automatically load the specified page, giving you the ability to interact with it and extract the needed data. By setting it up correctly, your script will behave like a regular user browsing the site, which is important when dealing with dynamic websites like IMDb.

driver.get(Website_Url_you_want_to_scrape)

Let’s dive into the project!

To begin, we need to access IMDb’s Top Movies page. The initial step involves obtaining the URL of the IMDb page from which we’ll pull data. In this instance, we are targeting the Top 250 Movies section.

Here’s how to retrieve the correct URL:

- Launch your web browser.

- Head over to the IMDb website (imdb.com).

- Find and open the “Top 250 Movies” chart page.

With the page open, you’ll be ready to scrape the movie information.

In this step, the function scrape_imdb_top_250(): is defined to scrape movie details, and a Chrome WebDriver is configured using Selenium along with WebDriver Manager. To prevent IMDb from detecting and blocking the script as a bot, a user-agent header is added, making the request appear as if it’s coming from a regular browser session.

def scrape_imdb_top_250():

url = 'https://www.imdb.com/chart/top/'

# Set up the Chrome WebDriver using WebDriver Manager

options = webdriver.ChromeOptions()

options.add_argument(f"user-agent={headers['User-Agent']}")

service = Service(ChromeDriverManager().install())

driver = webdriver.Chrome(service=service, options=options)

driver.get(url)

time.sleep(3)

The driver.get(url) method directs the Chrome browser to open the IMDb page. To make sure the full page content is loaded before we start extracting any data, we include a short pause using time.sleep(3).

Extracting Movie Information

Once the page has fully loaded, we can begin locating and extracting important movie details. This includes the movie’s title, IMDb rating, release year, duration, runtime, vote count, description, poster URL, stars, and director.

We utilize Selenium to capture all the movie elements by targeting their class names. This generates a list of movies that we can loop through to retrieve the necessary details for each one.

# Find all movies listed in the Top 250 table

movies = driver.find_elements(By.CLASS_NAME, 'ipc-metadata-list-summary-item__c')

movie_list = []

Retrieve Movie Details

In this phase, we pull out the movie title. The script checks if the title has a numeric prefix followed by a period (like “1. The Shawshank Redemption”) and removes the number, leaving only the name. If there’s no number, the full title is used as it is.

Since the title often includes a number, we split it into two parts and extract only the text portion for further use.

1. Movie Title:

for movie in movies:

# Extract title

title_column = movie.find_element(By.CLASS_NAME, 'ipc-title__text')

full_title = title_column.text.strip()

# Check if the title contains a period before splitting

if '.' in full_title:

title = full_title.split('.', 1)[1].strip()

else:

title = full_title # If no period, use the entire title

2. Retrieve Release Year:

# Extract year

if len(inline_list_items) > 0:

year = inline_list_items[0].text.strip()

year = int(year) if year.isdigit() else None

else:

Here, we extract the year of the movie's release using the XPath. If the year is available, it's converted into an integer. Otherwise, it's set as None. year = None

3. Extract IMDB rating:

imdb_rating = movie.find_element(By.CLASS_NAME, 'ipc-rating-star--rating').text.strip()

imdb_rating = float(imdb_rating) if imdb_rating else None

4. Retrieve run time:

if len(inline_list_items) > 2:

runtimeGen = inline_list_items[2].text.strip()

else:

runtimeGen = 'N/A'

5. Retrieve Rating Count:

rating_count = movie.find_element(By.CLASS_NAME, 'ipc-rating-star--voteCount').text.strip() if movie.find_elements(By.CLASS_NAME, 'ipc-rating-star--voteCount') else 'N/A'

6. Retrieve Details:

In the screenshot above, the arrow indicates a button that you can click to reveal the Description, Director, and Stars data. When viewing the initial image of the Top 250 Movies, you’ll notice that this information isn’t visible. To access it, we must click that button first. This action instructs Selenium on how to proceed; otherwise, it won’t be able to scrape the required data.

To achieve this, you can add the following code snippet to the function we previously defined, scrape_imdb_top_250():

# Click the button to trigger the detailed view

try:

# Locate the button by ID and click it

button = driver.find_element(By.ID, 'list-view-option-detailed')

button.click()

time.sleep(2) # Wait for the page to load the detailed view

except Exception as e:

print(f"Error clicking the button: {e}")

7. Extract Directors and Stars Details:

director = movie.find_element(By.XPATH, './/span/a[@class="ipc-link ipc-link--base dli-director-item"]').text.strip() if movie.find_element(By.XPATH, './/span/a[@class="ipc-link ipc-link--base dli-director-item"]') else 'N/A'

stars = [star.text.strip() for star in movie.find_elements(By.XPATH, './/span/a[@class="ipc-link ipc-link--base dli-cast-item"]')

This step scrapes the director’s name and the list of starring actors for each movie using the XPath for each element. The stars are returned as a list of names.

8. Extract the Movie URL:

image_element = movie.find_element(By.XPATH, './/div/img[@class="ipc-image"]')

image_url = image_element.get_attribute('src')

Ultimately, the script retrieves the URL of the movie poster by pulling the src attribute from the image tag.

9. Compile Movie Information:

movie_list.append({

'Title': title,

'Year': year,

'Time': MovieTime,

'Runtime': runtimeGen,

'IMDb Rating': imdb_rating,

'Rating Count': rating_count,

'Description': description,

'Director': director,

'Stars': ", ".join(stars),

'Image URL': image_url

})

After scraping all the details for a movie, the information is organized into a dictionary and added to the movie_list. By the end of the script’s execution, this list will hold all the collected movie data.

10. Close the web driver:

driver.quit()

return movie_list

Part 2 – Storing Data in SQL Database

Establishing the Database Connection:

In this stage, we define the insert_into_database function. Within this function, we create a connection to a SQL Server using the pyodbc library. The connection string utilizes the “ODBC Driver 17 for SQL Server” and specifies your server name (e.g., Your_Server_Name\SQLEXPRESS), the database name (e.g., Your_DataBase_Name), and employs Windows Authentication by setting Trusted_Connection=yes.

This setup enables us to securely connect to the database for inserting our scraped movie data.

# Function to insert data into SQL Server

def insert_into_database(movies):

# SQL Server connection setup with Windows Authentication

connection = pyodbc.connect(

'DRIVER={ODBC Driver 17 for SQL Server};'

'SERVER= Add_Your_SQL_ServerName;' # Add your SQL server name

'DATABASE=Add_Database_Name;' # SQL your Database name

'Trusted_Connection=yes;'

)

When connecting to a SQL Server, there are generally two methods of authentication available:

- Windows Authentication:This method allows users to connect to the SQL Server using their Windows credentials. It’s a secure option as it relies on the existing Windows user accounts and is typically recommended for environments where users are already authenticated within a Windows domain.

- SQL Server Authentication:This method requires users to log in with a specific username and password set up in the SQL Server. It is useful for applications that need to connect to the database without relying on Windows accounts, but it can be less secure if not managed properly.

Establishing the Cursor and Verifying Table Existence:

The cursor is utilized to execute SQL commands. In this step, we verify whether a table named IMDB_Top_250_Movies exists in your database.

If the table is not present, it will be created with the following fields:

id: An auto-incrementing primary key.

Title: The title of the movie (text, mandatory).

Year: The year of release (integer).

MovieTime: The duration of the movie (e.g., ‘2h 14min’).

Runtime: The movie’s rating (e.g., ‘PG’, ‘R’).

IMDB_Rating: The IMDb rating (e.g., 8.5).

Rating Count: The total number of votes (e.g., ‘1.2M’).

Description: A brief description of the movie.

Director: The name of the movie’s director.

Stars: The main stars, stored as a string separated by commas.

ImageURL: The URL of the movie’s poster image.

cursor = connection.cursor()

# Create table if it doesn't exist

cursor.execute('''

IF NOT EXISTS (SELECT * FROM sysobjects WHERE name='IMDB_Top_250_Movies_1' AND xtype='U')

BEGIN

CREATE TABLE IMDB_Top_250_Movies (

id INT IDENTITY(1,1) PRIMARY KEY,

Title NVARCHAR(255) NOT NULL,

Year INT,

MovieTime NVARCHAR(10),

Runtime NVARCHAR(10),

IMDB_Rating FLOAT,

Rating_Count NVARCHAR(20),

Description TEXT,

Director VARCHAR(255),

Stars VARCHAR(255),

ImageURL NVARCHAR(MAX)

)

END

''')

Inserting Data into the Table:

The loop below goes through each movie in the movies list, which is provided to the insert_into_database function. For every movie, it inserts the movie details (such as title, year, runtime, rating, and more) into the database. Placeholders (?) are utilized for the values, and the corresponding movie data (like movie[‘Title’], movie[‘Year’], etc.) is supplied in the correct sequence.

# Insert data into the table

for movie in movies:

cursor.execute('''

INSERT INTO IMDB_Top_250_Movies_1 (Title, Year, MovieTime, Runtime, IMDB_Rating, Rating_Count, Description, Director, Stars, ImageURL)

VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)

''',

movie['Title'],

movie['Year'],

movie['Time'],

movie['Runtime'],

movie['IMDb Rating'],

movie['Rating Count'],

movie['Description'],

movie['Director'],

movie['Stars'],

movie['Image URL']

)

Committing Changes and Closing the Connection:

connection.commit()

cursor.close()

connection.close()

Once all movie data has been inserted into the database, connection.commit() is called to save the changes. After that, the cursor and database connection are closed.

Main Function – Executing the Scraper and Storing Data in SQL Server

In this final step, we establish the main entry point of our Python script. Here’s the process:

- Data Scraping:The script invokes the scrape_imdb_top_250() function to gather data from IMDb’s Top 250 movies list. If the data collection is successful, it outputs the total number of movies scraped.

- Storing Data in SQL Server:If movies are scraped successfully, the script then calls the insert_into_database() function, which inserts the scraped movie details into the SQL Server database.

Error Handling: If no data is scraped (in case of an error or failure), the script will notify you by printing “No data scraped.”

connection.commit()

cursor.close()

connection.close()

Conclusion

In this blog, you’ve discovered how to extract movie data from IMDb’s Top 250 list and save it in a SQL Server database using Python. We covered the entire process, including setting up a web scraper with Selenium, managing the data you collected, and inserting it into the database with Windows Authentication for smooth integration. You’ve now equipped yourself with the skills to automate web scraping and data management effectively!

Whether you’re tackling a personal project or developing a professional application, this method can be tailored to suit a wide range of situations. It can handle everything from basic data gathering to more intricate web scraping tasks.